This set of multiple-choice questions (MCQs) covers the Deep Learning NPTEL Week 2 assignment answers.

NOTE: You can check your answer immediately by clicking the show answer button. This set of “Deep Learning NPTEL Week 2 answers” contains 10 questions.

Now, start attempting the quiz.

Deep Learning NPTEL 2023 Week 2 Quiz Solutions

Q1. Suppose you are solving a four-class problem. How many discriminant functions will you need?

a) 1

b) 2

c) 3

d) 4

Answer: d) 4

Q2. Two random variables X1 and X2 follow Gaussian distributions with the following means and covariances.

Which of the following is true?

a) Distribution of X1 will be more flat than the distribution of X2

b) Distribution of X2 will be more flat than the distribution of X1

c) Peak of the both distribution will be same

d) None of the above

Answer: a) Distribution of X1 will be more flat than the distribution of X2
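
As an illustration of what "more flat" means: in the univariate case the peak of a Gaussian density is 1/(sigma * sqrt(2 * pi)), so a larger spread gives a lower, wider curve. The sketch below uses made-up standard deviations (`sigma_wide`, `sigma_narrow`), because the question's actual parameters are not reproduced on this page.

```python
import math

def gaussian_peak(sigma):
    """Height of a univariate normal density at its mean."""
    return 1.0 / (sigma * math.sqrt(2.0 * math.pi))

# Hypothetical standard deviations, not the values from the question.
sigma_wide = 2.0
sigma_narrow = 1.0

peak_wide = gaussian_peak(sigma_wide)
peak_narrow = gaussian_peak(sigma_narrow)

# The distribution with the larger spread has the lower peak, i.e. it is flatter.
print(peak_wide < peak_narrow)
```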

Q3. Which of the following is true with respect to the discriminant function for normal density?

a) Decision surface is always the orthogonal bisector to two surfaces when the covariance matrices of different classes are identical but otherwise arbitrary

b) Decision surface is generally not orthogonal to two surfaces when the covariance matrices of different classes are identical but otherwise arbitrary

c) Decision surface is always orthogonal to two surfaces but not bisector when the covariance matrices of different classes are identical but otherwise arbitrary

d) Decision surface is arbitrary when the covariance matrices of different classes are identical but otherwise arbitrary

Answer: b)

Q4. In which of the following cases does the decision surface intersect the line joining the means of the two classes at its midpoint? (Consider the class variance to be large relative to the difference between the two means.)

a) When both covariance matrices are identical and diagonal

b) When the covariance matrices of both classes are identical but otherwise arbitrary

c) When both covariance matrices are identical and diagonal, and both classes have equal class probability

d) When the covariance matrices of the two classes are arbitrary and different

Answer: c)
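
A rough numerical check of the midpoint claim, assuming the simplest case where both classes share the covariance sigma^2 * I. In that case the decision hyperplane crosses the line joining the means at x0 = (mu1 + mu2)/2 - (sigma^2 / ||mu1 - mu2||^2) * ln(P(w1)/P(w2)) * (mu1 - mu2). The function name `boundary_point`, the means, and the priors below are all illustrative choices, not values from the assignment.

```python
import math

def boundary_point(mu1, mu2, sigma2, p1, p2):
    """Point where the decision hyperplane crosses the line joining the means,
    assuming both classes share the covariance sigma2 * I."""
    diff = [a - b for a, b in zip(mu1, mu2)]
    sq_dist = sum(d * d for d in diff)
    shift = (sigma2 / sq_dist) * math.log(p1 / p2)
    return [0.5 * (a + b) - shift * d for a, b, d in zip(mu1, mu2, diff)]

mu1, mu2 = [0.0, 0.0], [4.0, 0.0]  # hypothetical class means

# Equal priors: the log term vanishes and the crossing point is the midpoint.
equal = boundary_point(mu1, mu2, sigma2=1.0, p1=0.5, p2=0.5)

# Unequal priors: the crossing point moves away from the more probable class.
skewed = boundary_point(mu1, mu2, sigma2=1.0, p1=0.8, p2=0.2)

print(equal)
print(skewed)
```

With unequal priors the crossing point moves toward the mean of the less probable class, which is why equal class probability is part of the condition.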

Q5. The decision surface between two normally distributed classes w1 and w2 is shown in the figure. Which of the following is true?

Answer: c)

Q6. For a minimum distance classifier, which of the following must be satisfied?

a) All the classes should have identical, diagonal covariance matrices

b) All the classes should have identical but otherwise arbitrary covariance matrices

c) All the classes should have equal class probability

d) None of the above

Answer: c) All the classes should have equal class probability

Q7. You find that the software you designed for detecting spam emails has achieved an accuracy of 99%, i.e., it detects 99% of spam emails, and its false positive probability (a non-spam email detected as spam) is 5%. It is known that 50% of emails are spam. If an email is detected as spam, what is the probability that it is in fact a non-spam email?

a) 5/104

b) 5/100

c) 4.9/100

d) .25/100

Answer: a) 5/104
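
Working Q7 through Bayes' rule with the numbers stated in the question gives P(non-spam | detected) = (0.05 × 0.5) / (0.99 × 0.5 + 0.05 × 0.5) = 5/104, which is option a). The short check below uses exact fractions. The variable names are illustrative.

```python
from fractions import Fraction

p_spam = Fraction(1, 2)                # 50% of emails are spam
p_flag_given_spam = Fraction(99, 100)  # detection rate on spam
p_flag_given_ham = Fraction(5, 100)    # false positive rate on non-spam

p_ham = 1 - p_spam

# Total probability that an email is flagged as spam.
p_flag = p_flag_given_spam * p_spam + p_flag_given_ham * p_ham

# Bayes' rule: probability that a flagged email is actually non-spam.
p_ham_given_flag = p_flag_given_ham * p_ham / p_flag
print(p_ham_given_flag)  # 5/104
```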

Q8. Which of the following statements are true with respect to the k-NN classifier?

1. With a very large value of k, we may include points from other classes in the neighbourhood.

2. With too small a value of k, the algorithm is very sensitive to noise.

3. The k-NN classifier classifies unknown samples by assigning the label that is most frequent among the k nearest training samples.

a) Statement 1 only

b) Statement 1 and 2 only

c) Statement 1, 2, and 3

d) Statement 1 and 3 only

Answer: c) Statement 1, 2, and 3
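
The three statements can be seen on a toy example. The sketch below is a minimal majority-vote k-NN in plain Python. The data set, the query point, and the function name `knn_predict` are made up for illustration.

```python
import math
from collections import Counter

def knn_predict(train, query, k):
    """Label `query` by majority vote among its k nearest training points."""
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

# Hypothetical toy data: class "A" near the origin, class "B" far away,
# plus one noisy "B" point sitting inside the "A" cluster.
train = [
    ((0.0, 0.0), "A"), ((0.0, 1.0), "A"), ((1.0, 0.0), "A"),
    ((0.4, 0.4), "B"),
    ((5.0, 5.0), "B"), ((5.0, 6.0), "B"), ((6.0, 5.0), "B"), ((6.0, 6.0), "B"),
]
query = (0.5, 0.5)

for k in (1, 3, len(train)):
    print(k, knn_predict(train, query, k))
```

Here k = 1 follows the single noisy point, k = 3 recovers the surrounding class, and k equal to the size of the training set lets the distant class outvote the local one.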

Q9. You are given the following two statements. Which of these is/are true for k-NN?

1. With a very large value of k, we may include points from other classes in the neighbourhood.

2. With too small a value of k, the algorithm is very sensitive to noise.

a) 1

b) 2

c) 1 and 2

d) None of these

Answer: c) 1 and 2

Q10. The decision boundary of a linear classifier is given by the following equation.

4x1 + 6x2 - 11 = 0

What will be the class of each of the following two unknown input examples? (Consider class 1 as the positive class and class 2 as the negative class.)

a1 = [1, 2]

a2 = [1, 1]

a) a1 belongs to class 1, a2 belongs to class 2

b) a2 belongs to class 1, a1 belongs to class 2

c) a1 belongs to class 2, a2 belongs to class 2

d) a1 belongs to class 1, a2 belongs to class 1

Answer: a) a1 belongs to class 1, a2 belongs to class 2
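
The answer can be checked by evaluating g(x) = 4·x1 + 6·x2 - 11 at each point and looking at the sign. The helper `classify` below is an illustrative sketch. Sending points that lie exactly on the boundary to class 2 is an arbitrary choice made here, not something the question specifies.

```python
def classify(point, weights=(4.0, 6.0), bias=-11.0):
    """Return 1 if g(x) = w . x + b is positive, otherwise 2."""
    g = sum(w * x for w, x in zip(weights, point)) + bias
    return 1 if g > 0 else 2

a1 = [1, 2]  # g = 4 + 12 - 11 = 5
a2 = [1, 1]  # g = 4 + 6 - 11 = -1
print(classify(a1), classify(a2))
```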

Deep Learning NPTEL 2022 Week 2 answers

Q1. Suppose you are solving an n-class problem. How many discriminant functions will you need?

a) n-1

b) n

c) n+1

d) n-2

Answer: b)

Q2. Suppose we choose the discriminant function gi(x) as a function of the posterior probability, i.e. gi(x) = f(p(wi|x)). Which of the following cannot be the function f()?

a) f(x) = a^x, where a > 1

b) f(x) = a^(-x), where a > 1

c) f(x) = 2x + 3

d) f(x) = exp(x)

Answer: b)
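
The reasoning is that f must be monotonically increasing, so that the class with the largest posterior still has the largest discriminant value. The sketch below reads options a) and b) as the exponentials a^x and a^(-x) (an assumption about the original notation) and uses made-up posteriors and a = 2.

```python
import math

posteriors = [0.2, 0.5, 0.3]  # hypothetical P(w_i | x) for three classes

def argmax(values):
    """Index of the largest value."""
    return max(range(len(values)), key=lambda i: values[i])

# Candidate transforms f, with a = 2 chosen for illustration.
candidates = {
    "a^x": lambda p: 2.0 ** p,
    "a^(-x)": lambda p: 2.0 ** (-p),
    "2x + 3": lambda p: 2.0 * p + 3.0,
    "exp(x)": lambda p: math.exp(p),
}

# Class chosen when each transform is used as the discriminant function.
decisions = {name: argmax([f(p) for p in posteriors]) for name, f in candidates.items()}
for name, winner in decisions.items():
    print(name, "picks class index", winner)
print("posterior argmax:", argmax(posteriors))
```

Only the decreasing transform changes the decision: it picks the class with the smallest posterior instead of the largest.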

Q3. What will be the nature of the decision surface when the covariance matrices of different classes are identical but otherwise arbitrary? (Assume all the classes have equal class probabilities.)

a) Always orthogonal to two surfaces

b) Generally not orthogonal to two surfaces

c) Bisector of the line joining the two means, but not always orthogonal to two surfaces

d) Arbitrary

Answer: c)

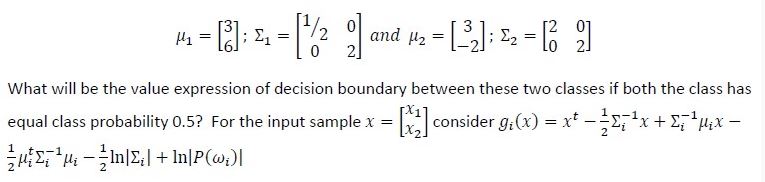

Q4. The mean and variance of all the samples of two different normally distributed classes w1 and w2 are given.

a) x2 = 3.514 - 1.12x1 + 0.187x1^2

b) x1 = 3.514 - 1.12x2 + 0.187x2^2

c) x1 = 0.514 - 1.12x2 + 0.187x2^2

d) x2 = 0.514 - 1.12x2 + 0.187x2^2

Answer: a)

Q5. For a two-class problem, the linear discriminant function is given by g(x) = a^T y, where y is the augmented feature vector. What is the update rule for finding the weight vector a?

a) Adding the sum of all misclassified augmented feature vectors, multiplied by the learning rate, to the current weight vector

b) Subtracting the sum of all misclassified augmented feature vectors, multiplied by the learning rate, from the current weight vector

c) Adding the sum of all augmented feature vectors belonging to the positive class, multiplied by the learning rate, to the current weight vector

d) Subtracting the sum of all augmented feature vectors belonging to the negative class, multiplied by the learning rate, from the current weight vector

Answer: a)
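
Option a) corresponds to the batch perceptron update, sketched below under the usual convention that negative-class augmented vectors are negated first, so that every correctly classified sample satisfies a · y > 0. The data points and the function name `batch_perceptron` are hypothetical.

```python
def batch_perceptron(samples, eta=1.0, max_iters=100):
    """samples: augmented vectors y, with negative-class vectors already negated.
    A sample counts as misclassified when a . y <= 0."""
    a = [0.0] * len(samples[0])
    for _ in range(max_iters):
        wrong = [y for y in samples if sum(ai * yi for ai, yi in zip(a, y)) <= 0]
        if not wrong:
            break
        # a <- a + eta * (sum of misclassified augmented vectors)
        a = [ai + eta * sum(y[j] for y in wrong) for j, ai in enumerate(a)]
    return a

positive = [(2.0, 2.0), (3.0, 1.0)]     # hypothetical class 1 points
negative = [(-1.0, -1.0), (-2.0, 0.0)]  # hypothetical class 2 points

# Augment with a leading 1, then negate the negative-class vectors.
samples = [[1.0, *x] for x in positive] + [[-1.0, *(-v for v in x)] for x in negative]

a = batch_perceptron(samples)
print(a)
```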

Q6. For a minimum distance classifier, which of the following must be satisfied?

a) All the classes should have identical, diagonal covariance matrices

b) All the classes should have identical but otherwise arbitrary covariance matrices

c) All the classes should have equal class probability

d) None of the above

Answer: c)

Q7. Which of the following is the update rule of the gradient descent algorithm? Here ∇ is the gradient operator and η is the learning rate.

a) a_{n+1} = a_n - η∇F(a_n)

b) a_{n+1} = a_n + η∇F(a_n)

c) a_{n+1} = a_n - η∇F(a_{n-1})

d) a_{n+1} = a_n + η∇F(a_{n-1})

Answer: a)
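
A minimal sketch of this update: step against the gradient evaluated at the current iterate. The objective F(a) = (a - 3)^2, the starting point, and the learning rate `eta` are illustrative choices, not part of the question.

```python
def gradient_descent(grad, a0, eta=0.1, steps=100):
    """Iterate a_{n+1} = a_n - eta * grad(a_n)."""
    a = a0
    for _ in range(steps):
        a = a - eta * grad(a)
    return a

# Hypothetical objective F(a) = (a - 3)^2, whose gradient is 2 * (a - 3).
a_min = gradient_descent(lambda a: 2.0 * (a - 3.0), a0=0.0)
print(a_min)  # close to the minimiser a = 3
```

Flipping the sign, as in option b), would move the iterate uphill and away from the minimiser.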

Q8. The decision surface between two normally distributed classes w1 and w2 is shown in the figure. Which of the following is true?

a) p(w1) = p(w2)

b) p(w2) > p(w1)

c) p(w1) > p(w2)

d) None of the above

Answer: c)

Q9. In the k-nearest neighbours (k-NN) algorithm, how do we classify an unknown object?

a) Assigning the label which is most frequent among the k nearest training samples

b) Assigning the unknown object to the class of its nearest neighbour among the training samples

c) Assigning the label which is most frequent among all the training samples except the k farthest neighbours

d) None of these

Answer: a)

Q10. What is the direction of the weight vector with respect to the decision surface for a linear classifier?

a) Parallel

b) Normal

c) At an inclination of 45°

d) Arbitrary

Answer: b)
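
One way to see this: any vector lying in the decision surface w · x + b = 0 has zero dot product with w. The sketch below checks this for a two-dimensional example. The weights and bias are hypothetical values chosen for illustration.

```python
w = (4.0, 6.0)  # hypothetical weight vector
b = -11.0       # hypothetical bias

def point_on_boundary(x1):
    """Solve w[0]*x1 + w[1]*x2 + b = 0 for x2."""
    return (x1, (-b - w[0] * x1) / w[1])

p = point_on_boundary(0.0)
q = point_on_boundary(3.0)

# Vector between two boundary points, so it lies in the decision surface.
direction = (q[0] - p[0], q[1] - p[1])

# A (near) zero dot product means w is normal to the surface.
dot = w[0] * direction[0] + w[1] * direction[1]
print(dot)
```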
